Hi Quartz members,

Last week, Quartz got access to one of the most powerful artificial intelligence systems in the world. We’re not special: Anyone can now use OpenAI’s API, provided they explain what they plan to use it for and adhere to the company’s safety guidelines. The API launched in June 2020, but with a waitlist. This November, the company dropped the waitlist and expanded access.

The system, called GPT-3, takes text “prompts” and responds by predicting what comes next. Provide a few paragraphs of text, for example, then type “TL;DR” and GPT-3 writes a summary. It’s not hard to get GPT-3 to mess up, but it’s also not hard to teach it to do things that were only recently beyond the capability of the average computer.

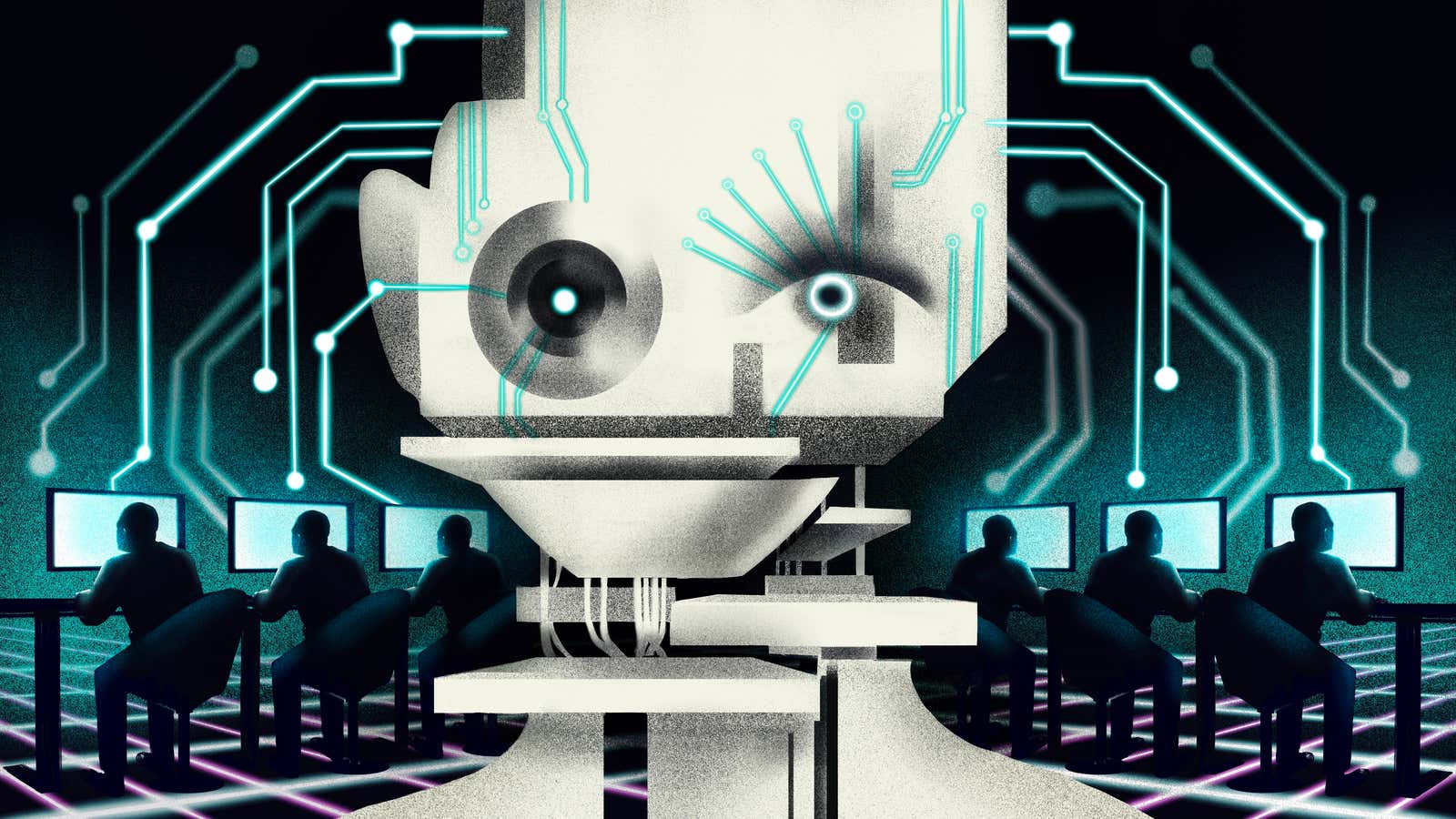

GPT-3 is not the only system of its kind—there are other large natural language systems available to experienced developers. But it’s easy enough to use that almost anyone can experiment with the technology. What happens when every software developer, and even people who can’t code, have access to advanced AI?

Meet GPT-3

GPT-3 takes text “prompts” and makes predictions; think of it as an incredibly advanced version of the autocomplete on your phone. Coders can use GPT-3 via its API, but non-coders can experiment with it using the built-in web interface. It doesn’t remember: Ask it the same thing in two separate sessions and it won’t recall that it has already answered. Systems like this are trained by being fed large portions of the text on the internet, along with books and other sources. That volume of training data is what makes them so good at filling in the next step in a pattern.
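To make the autocomplete comparison concrete, here is a deliberately tiny toy in Python: a “bigram” model that counts which word follows which in some training text, then keeps appending the most common follower. This is only an illustration of “predict what comes next,” not how GPT-3 works inside; GPT-3 is a neural network with 175 billion parameters that takes far more context into account than the single previous word. The function names are our own, made up for this sketch.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(model: dict[str, Counter], prompt: str, n_words: int = 5) -> str:
    """Repeatedly append the most frequent next word seen during training."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(n_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training; stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat the cat ate the fish")
    print(autocomplete(model, "the", 1))
```

Feed it more text and its guesses get better, which is the same basic intuition (at a vastly smaller scale) behind training on huge swaths of the internet.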

That formula—here’s some text, tell me what you think comes next—sounds limited, but allows for a lot of different interactions. You can chat with GPT-3 if you want to—or ask it questions, or ask it to tell you a story. But it’s even more interesting to provide some text and ask the model to make sense of it. I asked it to provide a “TL;DR” of the Wikipedia entry about GPT-3. Here’s how it summarized the first few sections:

The TL;DR of GPT-3 is that you can generate text in a way that’s extremely realistic, but you can’t really tell how realistic it is until you try to use it to do something.

That’s exactly right, based on my experience with the model. The responses I included here are better than the average answers the AI spat back out at me, but only by a little. The system is mostly coherent, makes plenty of mistakes, and sometimes makes your jaw drop. I showed it just a handful of examples of Quartz’s “By the digits” format that we often use in our emails and it immediately learned to mimic it. When I asked it to write a “By the digits” about the GPT-3 Wikipedia page, it got the format right:

175 billion: The number of machine learning parameters in GPT-3

The odd thing about GPT-3 is that it’s better at writing than at accuracy. It picked up the unusual sentence structure of “By the digits” from just a few examples but struggled to get its facts right. Computers are typically full of facts but short on creativity and flexibility; GPT-3 is the reverse.
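Both tricks above, the “TL;DR” summary and the “By the digits” mimicry, come down to how the prompt is laid out. The Python sketch below only builds the text you would hand to the model; it does not call OpenAI’s API. The “Text:” and “By the digits:” labels and the function names are hypothetical choices for illustration, not a format OpenAI requires.

```python
def tldr_prompt(text: str) -> str:
    """Paste the text, then end with 'TL;DR:' so a summary is the natural continuation."""
    return text.rstrip() + "\n\nTL;DR:"


def few_shot_prompt(examples: list[tuple[str, str]], new_text: str) -> str:
    """Stack a few worked examples, then leave the last answer blank for the model."""
    blocks = [f"Text: {source}\nBy the digits: {digits}" for source, digits in examples]
    blocks.append(f"Text: {new_text}\nBy the digits:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    print(tldr_prompt("A few paragraphs from a Wikipedia article..."))
    print(few_shot_prompt([("Example article", "Example figure: what it means")],
                          "The GPT-3 Wikipedia page"))
```

Because the prompt ends right where the answer should begin, the most likely continuation is an answer in the same shape as the examples.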

Use cases

Hundreds of apps are being built using OpenAI’s API; dozens of projects posted on ProductHunt, a site for tech launches, mention GPT-3. Here are some of the things it’s being used for:

📖 Summarizing: The app Genei uses GPT-3 to summarize documents to save readers time. Grok promises daily digests of Slack channels.

✏️ Writing: Several of the most upvoted projects on ProductHunt to mention GPT-3 involved automating the writing of marketing copy, including Copysmith (“GPT-3 powered content marketing that feels like magic”) and CopyAI (“GPT-3 powered tools to help you with your copywriting”).

🎮 Gaming: Fable Studio uses GPT-3 to create stories for its digital characters.

🖥️ Coding: GitHub Copilot uses OpenAI Codex, a descendant of GPT-3, to translate text into code. Pygma does something similar, translating what designers create in Figma, a design tool, into code.

Learn to code

The OpenAI API isn’t the only way to use GPT-3. Microsoft, which has invested $1 billion in OpenAI, provides access to some of its customers through its own APIs. And earlier this year Microsoft subsidiary GitHub released a tool called Copilot, which tries to give developers the experience of “pair programming” without needing a human partner. Instead of a colleague looking over your shoulder while you code (checking for bugs, making suggestions, reminding you of the syntax you’ve forgotten), it’s GPT-3, popping onto your screen with grayed-out text.

Quartz tried Copilot, too. It’s not perfect, but neither are humans. Together, though, the result is strikingly powerful, and unexpectedly delightful. Instead of turning to Google or Stack Overflow to look up the correct syntax or logic needed for a task, Copilot often suggests exactly what you need. You can even write plain-English descriptions of what you want a script to do, and the bot will instantly suggest the code to do it.

When it fails, the results spark joy. For instance, when we were updating the ingredient list for a Homemade Butterfingers recipe, it suggested the Dadaist ingredient list of milk, sugar, flour, butter, vanilla, and chocolate, repeated ad infinitum.

Danger, Will Robinson

OpenAI has put in place a variety of safeguards to ensure what it calls “responsible deployment” of GPT-3. It’s easy to get access to mess around with the AI, but to use it at scale developers have to go through a “production review,” akin to the process of getting an app admitted to Apple’s App Store, in which OpenAI staff review what the application intends to do. The company doesn’t allow applications intended to influence politics, for example, or that distribute large amounts of auto-generated content on social media.

The API also includes filters that detect whether content might be harmful or sensitive. That helps developers avoid creating apps that say offensive things; it also lets the OpenAI team monitor potentially problematic uses. (The worst I came across experimenting with GPT-3 was when its summary of Star Wars called Luke Skywalker a whiny virgin.)

But some critics say the problems with systems like GPT-3 go well beyond malicious use and toxic content. In late 2020, AI researcher and ethicist Timnit Gebru was forced out of her job at Google over a paper she co-wrote on the problems with large language models like GPT-3. The problems it identified included the cost and carbon footprint of training such models; the cost alone can run into the millions of dollars (OpenAI claims the process is getting more efficient).

And while access through an API lets more people use these models cheaply, it centralizes control of the technology—including how it can be used and what counts as “toxic” content. In a recent essay for the journal Interactions, Meredith Whittaker, a professor at NYU and co-founder of the AI Now Institute, argued that the field’s embrace of large language models like GPT-3 will further “allow [tech giants] to continue defining the terms and conditions of AI and AI research” at the expense of academics, activists, and workers.

🔮 Prediction

Developers looking to use AI models like GPT-3 have other options, but they require some technical fluency. The biggest effect of the GPT-3 API will be to make it easier for people without machine learning expertise to quickly prototype an AI application, says Hilary Mason, co-founder of the AI gaming startup Hidden Door.

Mason says that OpenAI’s tools will allow people with an idea to quickly get a sense of whether AI could solve it by showing GPT-3 a few examples and seeing how it does. “I think what we’ll see is a flowering of people playing,” she says. “That’s incredibly important. I think we tend to underestimate what happens when we can play.”

Keep learning

- The simple economics of machine intelligence (HBR)

- Reflections on Foundation Models (Stanford)

- The danger of letting Google lead AI research (Quartz)

- Timnit Gebru’s paper on the dangers of large language models (and a summary in MIT Technology Review)

- The steep cost of capture (Meredith Whittaker)

- EleutherAI is a collective building open source alternatives to GPT-3

- HuggingFace is a startup offering developers access to machine learning models

- Andrew Ng’s course on “AI for everyone” is a good non-technical introduction (Coursera)

Sound off

How do you feel about AI?

I’m ready to team up with AI to get more done

Let AI do more so we can get that 15-hour workweek Keynes promised

This won’t end well, as any number of dystopian movies can attest

In last week’s poll about empathetic bosses, 44% of respondents said their boss was extremely compassionate. Looks like they’re ahead of the trend.

Have a great week,

—Walter Frick, executive editor (couldn’t get GPT-3 to write a good sign-off)

David Yanofsky contributed to this report.

One 🤖 thing

We gave GPT-3 Robert Frost’s poem “The Road Not Taken” (“Two roads diverged in a yellow wood…”) and asked it to explain briefly what it meant. Here is what it said:

The speaker is standing at a fork in the road. He can go down one of two paths. He cannot choose both. He looks down one and sees that it is overgrown with weeds and grass. He looks down the other and sees that it is not. He chooses the less traveled path. The poem is about how choices matter. When he looks back on his life, he will be glad he took the less traveled path.