Nvidia sees “two simultaneous transitions” in tech—and investors are psyched

The AI chipmaker sees surging demand for its data center offerings amid the generative AI boom

With companies like Google and Microsoft racing to integrate artificial intelligence into their services, there’s already one clear winner: Nvidia, which makes the chips powering the AI boom.


For the three months ended April 30, Nvidia's revenue rose 19% from the previous quarter, while profit jumped 44%. (Year over year, revenue fell 13%, but lower expenses lifted net income 26%.)


The semiconductor company's shares surged nearly 25% to $381.50 in after-hours trading following the release of the first-quarter results. The stock had already risen 113% this year.

The chips are not cheap, either. Microsoft, for instance, uses tens of thousands of Nvidia's A100 graphics chips to power ChatGPT, a setup that cost several hundred million dollars, according to Bloomberg.

“The computer industry is going through two simultaneous transitions—accelerated computing and generative AI,” said Jensen Huang, founder and CEO of Nvidia.

Both trends are driving Nvidia's results. Revenue from its data center business reached a quarterly record of $4.28 billion, up 18% from the previous quarter and 14% from the same quarter a year earlier.

Why are Nvidia’s chips so sought after?

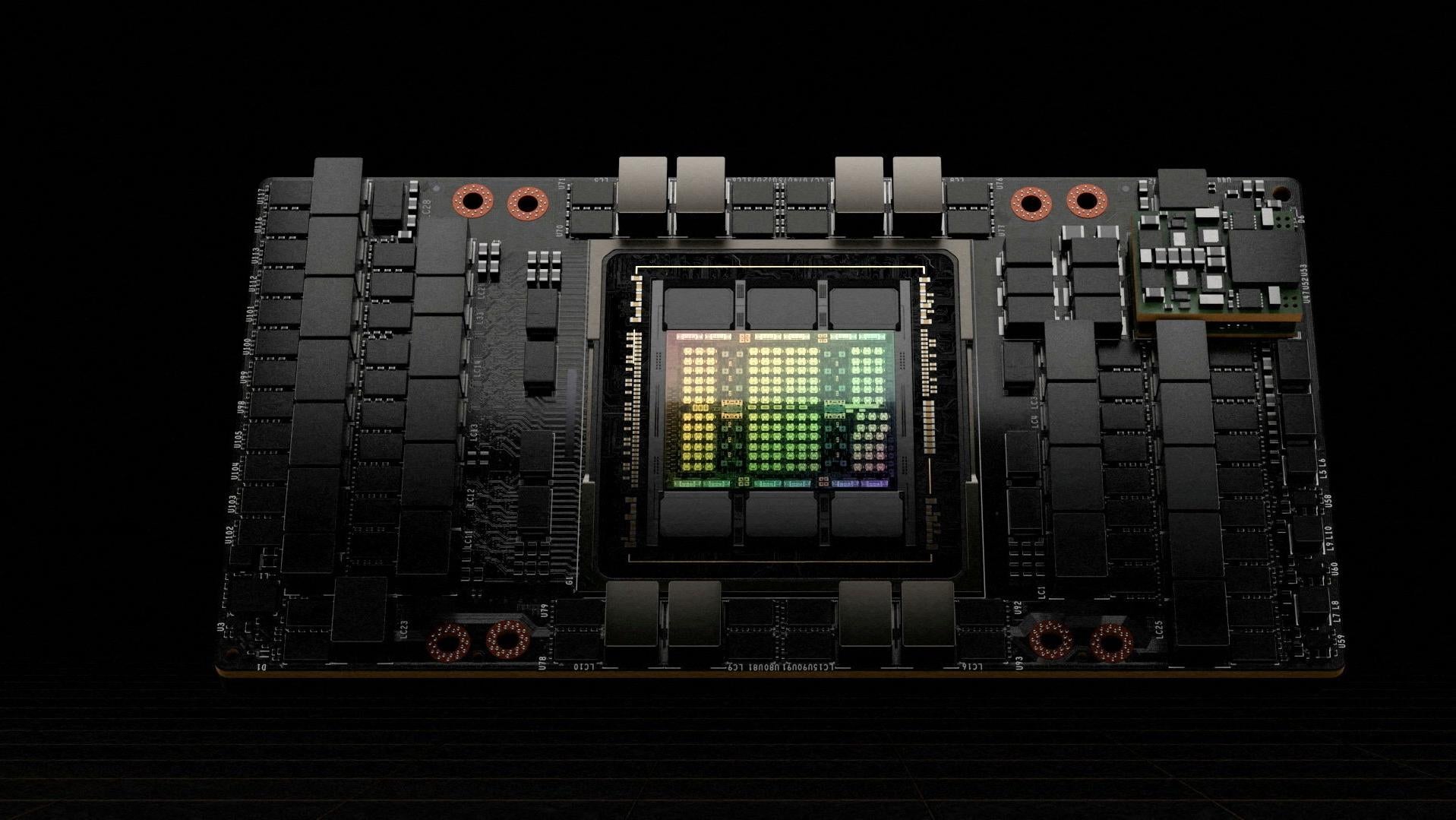

Nvidia dominates the market for graphics processing units, or GPUs. Originally known for rendering graphics and video, GPUs are increasingly used for AI because they can process many pieces of data at once.

The global AI chip market is projected to grow from $17 billion in 2022 to $227 billion by 2032, according to Precedence Research, a market research firm.
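To put that projection in perspective, the implied compound annual growth rate can be worked out from the two figures. The short Python sketch below is our own illustration, not a number published by Precedence Research; it assumes smooth compounding over the 10 years from 2022 to 2032, and the `cagr` helper is a hypothetical name chosen for this example. The result works out to roughly 30% growth a year.

```python
# Implied compound annual growth rate (CAGR) between two market-size figures.
# Inputs are the Precedence Research projections quoted in the article,
# in billions of US dollars. Assumes steady compounding every year (our assumption).
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(17, 227, 2032 - 2022)
print(f"Implied annual growth: {rate:.1%}")
```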

The future of the world’s data centers

On a May 24 conference call to discuss the quarterly results, Huang said the world's data centers are moving toward accelerated computing, adding that Nvidia has been preparing for this moment for the past 15 years.