Weekend Brief: Do AIs dream of electric sheep?

AI bots like ChatGPT and Bard are prone to hallucinations. That isn't good news.

Dear Quartz Africa Weekly subscriber,

And now...the Weekend Brief.

On the rare days we pause to think about it, generative AI bots like ChatGPT resemble hallucinations: like a fever dream of Alan Turing, or a missing chapter from an Arthur C. Clarke science-fiction fantasy. And that’s before we consider the fact that AIs themselves hallucinate, arriving at views of the world that have no basis in any information they’ve been trained on.

Sometimes, an AI hallucination is surprising, even pleasing. Earlier this week, Sundar Pichai, the CEO of Google, revealed that one of his company’s AI systems unexpectedly taught itself Bengali, after it was prompted a few times in the language. It hadn’t been trained in Bengali at all. “How this happens is not well understood,” Pichai told CBS’s 60 Minutes. On other occasions, AI bots dream up inaccurate or fake information—and deliver it as convincingly as a snake-oil salesman. It isn’t clear yet whether the problem of hallucination can be solved—which poses a big challenge for the future of the AI industry.

Hallucinations are just one of the mysteries plaguing AI models, which often do things that can’t be explained—their so-called black box nature. When these models first came out, they were designed not just to retrieve facts but also to generate new content based on the datasets they digested, as Zachary Lipton, a computer scientist at Carnegie Mellon, told Quartz. The data, culled from the internet, was so unfathomably vast that an AI could generate incredibly diverse responses from it. No two replies need ever be the same.

The versatility of AI responses has inspired many people to draw on these models in their daily lives. They use bots like ChatGPT or Google’s Bard as a writing aid, to negotiate salaries, or to act as a therapist. But, given their hallucinatory habits, it’s hard not to feel concerned that AIs will provide answers that sound right but aren’t. That they will be humanlike and convincing, even when their judgment is altogether flawed.

On an individual level, these errors may not be a problem; they’re often obvious or can be fact-checked. But if these models continue to be used in more complex situations—if they’re implemented across schools, companies, or hospitals—the fallout of AI mistakes could range from the inaccurate sharing of information to potentially life-threatening decisions. Tech CEOs like Pichai already know about the hallucination problem. Now they have to act on it.

LET’S INDUCE A HALLUCINATION

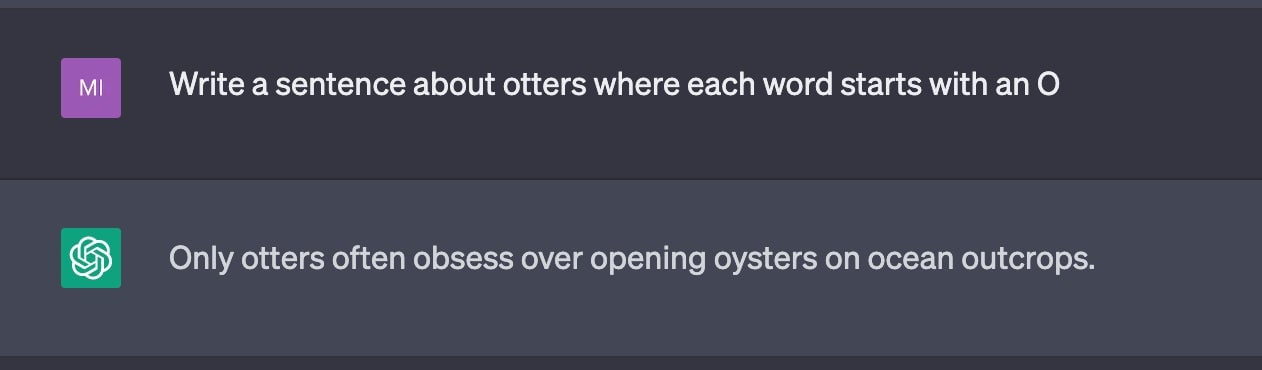

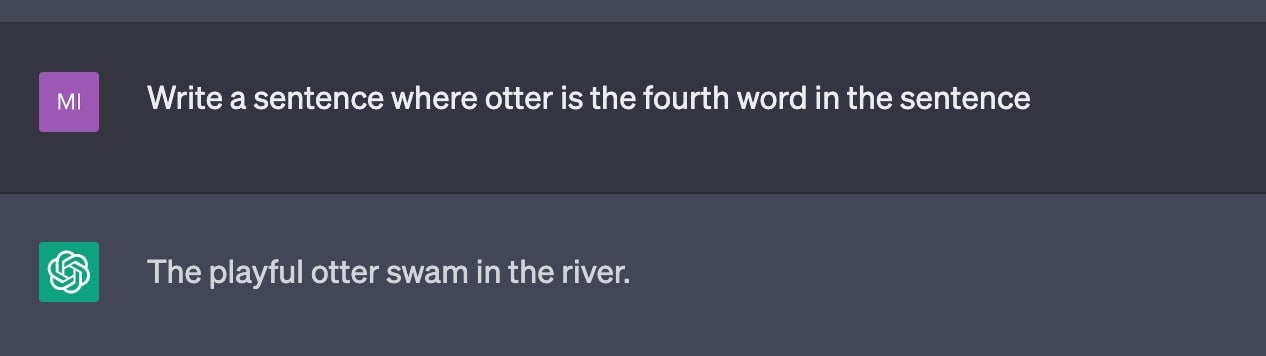

Queries like the ones below are part of what made ChatGPT take the world by storm.

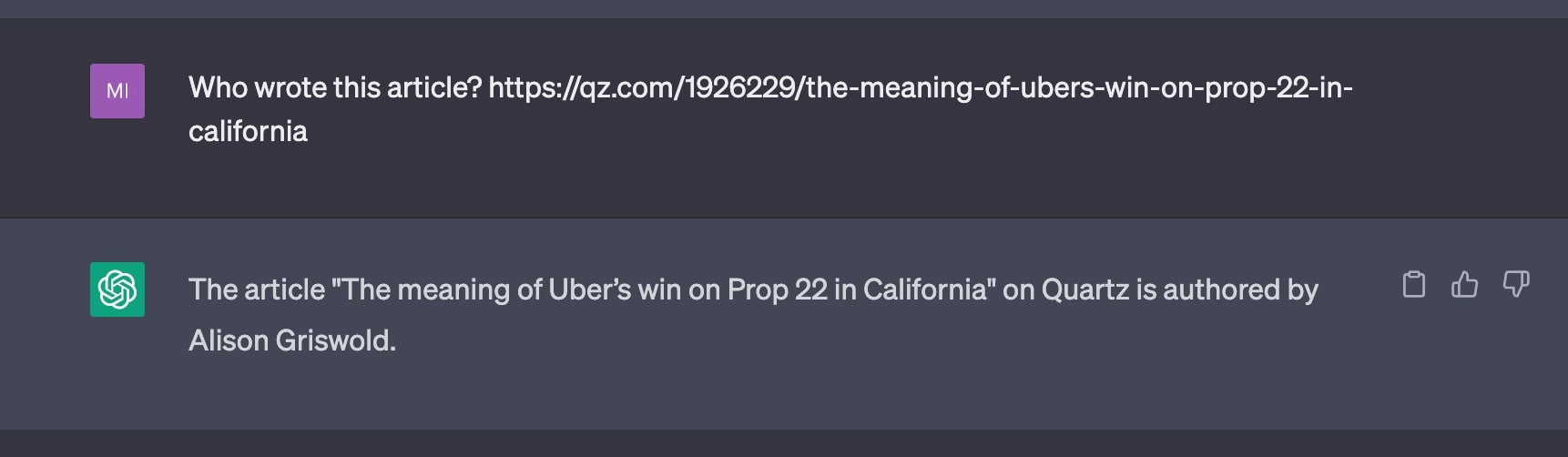

And then, at other times, the bot provides a response so consummately and obviously wrong that it comes off as silly.

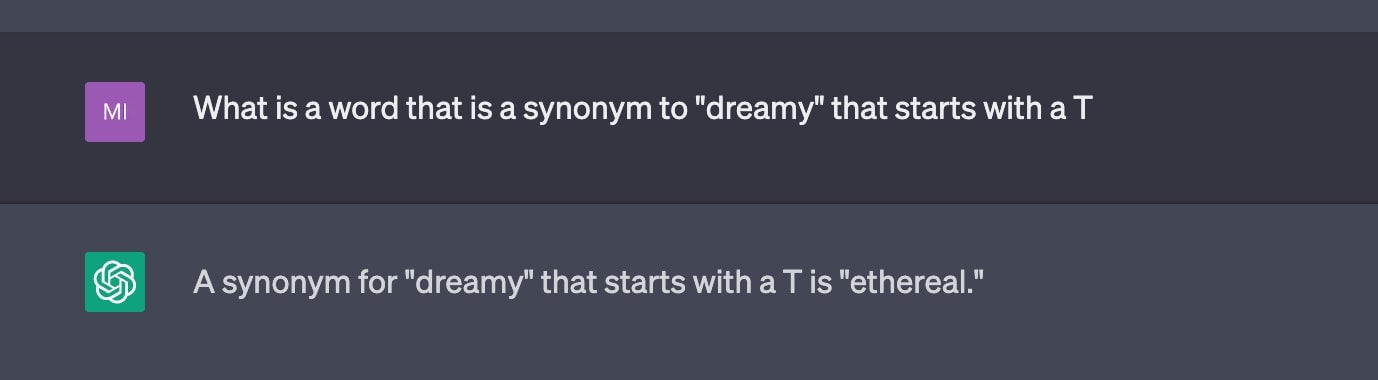

I also induced ChatGPT to hallucinate about my own work. Back in 2020, I’d written an article on the passing of Prop 22 in California, which allowed gig companies like Uber to keep their drivers classified as independent contractors rather than employees. ChatGPT didn’t think I wrote my own article, though. When queried, it said that Alison Griswold, a former Quartz reporter who had long covered startups, was the author. A hallucination! One possible reason ChatGPT arrived at this response is that Griswold’s coverage of startups is more voluminous than mine. Perhaps ChatGPT learned, from a pattern, that Quartz’s coverage of startups was linked to Griswold?

WHY DO AIs HALLUCINATE?

Computer scientists have propounded two chief reasons why AI hallucinations happen.

First: These AI systems, trained on large datasets, look for patterns in the text, which they can use to predict what the next word in a sequence should be. Most of the time, these patterns are what developers want the AI model to learn. But sometimes they aren’t. The training data may not be perfect, for instance; it may come from sites like Quora or Reddit, where people frequently hold outlandish or extreme opinions, so those samples work their way into the model’s predictive behavior as well. The world is messy, and data (or people, for that matter) don’t always fall into neat patterns.
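
To make that first reason concrete, here is a minimal toy sketch in Python. It is not how ChatGPT or Bard is actually built; the tiny corpus and the `predict_next` helper are invented purely for illustration. The sketch counts which word follows which, then predicts the most frequent follower. Because the made-up corpus repeats a wrong claim more often than the right one, the prediction comes out fluent but wrong.

```python
from collections import Counter, defaultdict

# Invented corpus for illustration: the wrong claim appears more often than the right one.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

# Count which word follows each word (a simple bigram model).
followers = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1

def predict_next(word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = followers.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

print(predict_next("is"))  # prints "sydney", the more common (and incorrect) pattern
```

The toy model has no notion of truth, only of frequency, which is the point of the example: messy training data leads directly to confident errors.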

Second: The models don’t know the answers to many questions, but they’re not smart enough to know whether they know the answer or not. In theory, bots like ChatGPT are trained to decline questions they can’t answer appropriately. But that doesn’t work all the time, so they often put out answers that are wrong.
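
The second reason can also be sketched as a toy in Python, under heavy assumptions: the `evidence` counts, the `answer` function, and the `min_confidence` threshold are all hypothetical, and real chatbots do not work this simply. The sketch shows a system that always returns its best guess unless it is told to decline when the guess rests on thin evidence.

```python
from collections import Counter

# Invented evidence counts for illustration: candidate answers seen for each question.
evidence = {
    "well-covered question": Counter({"answer A": 9, "answer B": 1}),
    "poorly-covered question": Counter({"answer A": 2, "answer B": 1, "answer C": 1}),
}

def answer(question, min_confidence=0.0):
    """Return the top candidate, or decline if its share of the evidence is too low."""
    counts = evidence.get(question)
    if not counts:
        return "I don't know"
    top, top_count = counts.most_common(1)[0]
    confidence = top_count / sum(counts.values())
    return top if confidence >= min_confidence else "I don't know"

# With no threshold, the model answers even when the evidence is split.
print(answer("poorly-covered question"))
# With a threshold of 0.8, it declines the shaky question but still answers the solid one.
print(answer("poorly-covered question", min_confidence=0.8))
print(answer("well-covered question", min_confidence=0.8))
```

In this toy, the confidence figure is just a share of counts. The harder problem the researchers describe is that a real model's sense of its own confidence is itself unreliable, so a threshold like this one does not always catch wrong answers.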

DREAM A LITTLE DREAM FOR ME

Do we want AIs to hallucinate, though? At least a little, say, when we ask models to write a rap or compose poetry. Some would argue that these creative acts shouldn’t be grounded merely in factual detail, and that all art is mild (or even extreme) hallucination. Don’t we want that when we seek out creativity in our chatbots?

If you limit these models to just spit out things that are very clearly derived from its data set, you’re limiting what the models can do, Saachi Jain, a PhD student studying machine learning at the Massachusetts Institute of Technology, told Quartz.

It’s a fine line, trying to rein in AI bots without stifling their innovative nature. To mitigate risk, companies are building guardrails, like filters to screen out obscenity and bias. In Bard, a “Google it” button takes users to old-fashioned search. On Bing’s AI chat model, Microsoft includes footnotes leading to the source material. Rather than limiting AI models to what they can and cannot do, rendering their hallucinations safe may just be about figuring out which AI apps need accurate, grounded data sets and which ones should let their imaginations soar.

ONE 🐑 THING

The Philip K. Dick book that became the movie Blade Runner was called Do Androids Dream of Electric Sheep? Rick Deckard, the bounty hunter who pursues androids, wonders how human his quarries are:

Do androids dream? Rick asked himself. Evidently; that’s why they occasionally kill their employers and flee here. A better life, without servitude. Like Luba Luft; singing Don Giovanni and Le Nozze instead of toiling across the face of a barren rock-strewn field.

Dreaming, to Rick, is evidence of humanity, or at least of some kind of quasi-humanity. It is evidence of desire, ambition, and artistic taste, and even of a vision of oneself. Perhaps today’s AI hallucinations, first cousins to dreams, are a start in that direction.

🎧 Want to hear more?

Michelle Cheng talks more about AI hallucinations in an upcoming episode of the Quartz Obsession podcast. In our new season, we’re discussing how technology has tackled humankind’s problems, with mixed—or shall we say hallucination-worthy—results.

Season 5 launches in May! Join host Scott Nover and guests like Michelle, by subscribing wherever you get your podcasts.


QUARTZ STORIES TO SPARK CONVERSATION

- A surprising finding about how Chinese companies pay in Africa

- More Americans are falling behind on paying down debt

- Thieves took $15 million worth of gold and other valuables from Canada’s largest airport

- There’s a kind of stress our brains don’t notice—and it’s burning us out

- The Super Mario Bros. Movie pushed US box office sales back to their pre-pandemic level

- Is Elon Musk the busiest CEO on Earth?

- How Virgin Orbit went bankrupt

5 GREAT STORIES FROM ELSEWHERE

💡 Less is more. There’s been increasing conversation around introducing four-day work weeks to improve employee wellbeing. As it turns out, working less might also be better for the planet. In a newsletter, Grist discusses research conducted by Juliet Schor, a professor at Boston College, that demonstrates working fewer days might cut down on emissions, and even beget more environmentally-friendly choices.

🌐 Old is new. Facebook is awash in ads, Twitter is aflame, and Instagram is insufferable, so what’s a netizen to do? The Verge delves into the rise of ActivityPub, a decentralized social network protocol that would enable you to carry your connections across a selection of sites in the interconnected “Fediverse.” This alternative model, which seems radical in the age of siloed social networks, is actually an idea as old as the internet itself.

🌿 Leafy origins. In the US’s legalized cannabis market, branding is key to smoking the competition. But that doesn’t explain why companies name strains “Donkey Butter” and “Glueberry Slurm.” Reporting for Esquire, Bill Shapiro meets up with breeders and branders to learn about their christening process. He even gets the chance to partake in a naming session, which doesn’t end up being an entirely sober affair.

🌾 Agro AI. Mega agriculture could be getting an AI update. Currently, industrial farms kill weeds by liberally spraying the crops with herbicides, but a Canadian startup called Precision AI has developed a drone that can target the unwanted plants with nearly 100% accuracy. Bloomberg writes about how the “precision farming” tool could not only reduce environmental pollution, but also lower agricultural costs and shore up food security.

🚢 Sea goop. Writer Lauren Oyler takes to the high seas in an amusing account of her experience on a cruise organized by Goop, the company founded by ski slope offender Gwyneth Paltrow. Traveling from Barcelona to Rome, Oyler dishes on a “psychological astrology” session led by a “soulless” instructor, and a workout with a Broadway-trained “fitness philanthropist.” Harper’s shares the (detoxed) juicy details.

Thanks for reading! And don’t hesitate to reach out with comments, questions, or topics you want to know more about.

Have a dreamy weekend,

— Michelle Cheng, emerging tech reporter

Additional contributions by Julia Malleck and Samanth Subramanian